My Project: A wearable device that notifies people when they touch their face, supporting public health efforts to reduce face-touching

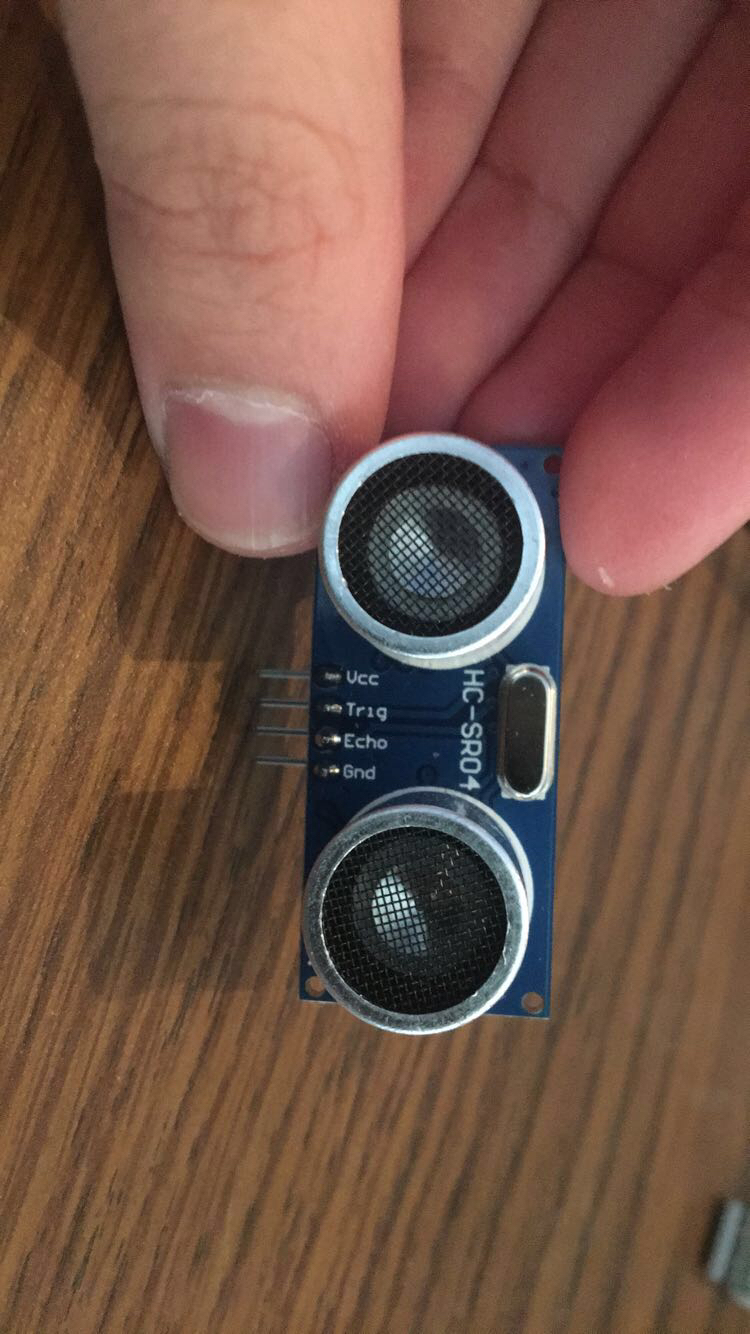

For my first attempt at creating a face-touching sensor, I tried a pair of ultrasonic sensors that I had purchased online. I used a tutorial which can be accessed here to get the sensors measuring their distance from one another. However, as I began to move the breadboards around, I realized that ultrasonic sensors would not be the best fit for the project. Each sensor can only read within a cone of up to approximately 30 degrees, so the area it covers shrinks as the sensor gets closer to an object. This meant that the sensors could only read each other’s position when the receiving sensor was within that cone.
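To put rough numbers on that cone, here is a minimal sketch of the geometry. It assumes the 30 degrees is the full opening angle of an ideal cone, which is a simplification, and `cone_width` is just an illustrative helper name.

```python
import math


def cone_width(distance_cm, full_angle_deg=30.0):
    """Width of an ideal detection cone at a given distance from the sensor.

    Assumes full_angle_deg is the full opening angle of the cone.
    """
    half_angle = math.radians(full_angle_deg / 2)
    return 2 * distance_cm * math.tan(half_angle)


# The closer the sensor is, the narrower the strip it can actually see
for d in (5, 20, 50):
    print(f"{d:>3} cm away: cone is about {cone_width(d):.1f} cm wide")
```

At hand-to-face distances the cone is only a few centimetres wide, so even a small wrist rotation moves the second sensor out of view.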

Given this limitation, I moved on to wiring up the accelerometers that I had purchased to try an alternative approach. Here, I ran into a bit of a problem: I don’t have a soldering iron at home, and the accelerometers came with loose pins. Luckily, with Rob’s help, I came up with a fix. I inserted the male ends of the wires into the sensor and twisted them until they were rigid, forming a solid point of contact.

My next step was to actually obtain readings from the accelerometer. To do this, I downloaded the MMA8451 library and used its sensor test example code. I initially had some trouble getting a readout in the serial monitor, but after tinkering with the wiring I finally got it to work!

I decided to use a machine learning approach with the accelerometer to determine when face touching occurred. To train a model, though, I first needed to create datasets. I started by touching my face many, many times (250+) and copying the printed data into a .txt file. I then did the same for instances when I was not touching my face. Then, I used Jupyter Notebook (available here) to parse the text files and load the acceleration and orientation data from the two datasets into separate NumPy arrays. My plaintext processing code is below:

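    file1 = 'facetouch.txt'
    touchtable = []  # one [x, y, z, orientation] row per reading

    with open(file1) as fd:
        counter = 1
        addlist = []
        for line in fd:
            fields = line.split()
            # Skip blank lines; only non-blank lines advance the counter
            if len(fields) > 1:
                if len(fields) == 7:
                    # Acceleration line: fields 1, 3 and 5 hold x, y and z
                    addlist.append(float(fields[1]))
                    addlist.append(float(fields[3]))
                    addlist.append(float(fields[5]))
                elif len(fields) == 3:
                    # Three-word orientation line, kept as a string
                    addlist.append(line.rstrip("\n"))
                counter += 1
                # Assumes three non-blank lines per reading, with the
                # acceleration line second and the orientation line third.
                # The row is flushed whenever counter is not a multiple of 3,
                # which is true right after the orientation line.
                if counter % 3:
                    print(addlist)
                    if len(addlist) != 0:
                        touchtable.append(addlist)
                    addlist = []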

Next, I had to turn my arrays into datasets to train and test the model. First, I had to convert the orientation strings, which are a categorical variable, into dummy variables so that the scikit-learn functions I was using could read them. To do this, I converted my arrays into dataframes and used the pandas.get_dummies() function. Then I needed to split my data into train and test sets. For this I used the sklearn.model_selection.train_test_split() function, which turned my data into an X set of features and a y set of labels for training, plus a separate X and y for testing.
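As a rough illustration of those two steps, here is a minimal sketch on a handful of made-up rows. The readings, the column names and the 25% test split are all assumptions for the example and are not my real data or settings.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Made-up rows in the shape [x, y, z, orientation]
touch_rows = [
    [0.12, -9.50, 1.30, "Portrait Up Front"],
    [0.40, -9.10, 2.00, "Portrait Up Back"],
    [0.25, -9.30, 1.10, "Portrait Up Front"],
    [0.31, -9.00, 1.70, "Portrait Up Back"],
]
no_touch_rows = [
    [9.60, 0.20, 0.50, "Landscape Left Front"],
    [9.40, 0.10, 0.90, "Landscape Left Front"],
    [9.70, -0.30, 0.40, "Landscape Right Front"],
    [9.50, 0.00, 0.60, "Landscape Right Front"],
]

df = pd.DataFrame(touch_rows + no_touch_rows,
                  columns=["x", "y", "z", "orientation"])
# 1 = face touch, 0 = no face touch
labels = [1] * len(touch_rows) + [0] * len(no_touch_rows)

# One 0/1 column per orientation string; numeric columns pass through unchanged
X = pd.get_dummies(df, columns=["orientation"])

# Hold out a quarter of the rows for testing, keeping both classes represented
X_train, X_test, y_train, y_test = train_test_split(
    X, labels, test_size=0.25, random_state=0, stratify=labels
)

print(X.columns.tolist())
print(X_train.shape, X_test.shape)
```

Calling get_dummies on the combined dataframe, before splitting, means the train and test sets end up with the same dummy columns.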

Once I did this, I used the sklearn.svm.SVC class to train a support vector machine (SVM) classifier on my data. The final step of the machine learning process was to convert this model into a file usable by Arduino (which works in C). I followed the tutorial available here and eventually saved my model as a .h file. I then modified the accelerometer test code to pass the sensor data, as a set of doubles, into the classification function from the .h file. My full code is available for download here.
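For anyone who has not trained an SVM before, the training step looks roughly like the sketch below. It runs on synthetic stand-in data, not my recordings, and the RBF kernel (scikit-learn's default) and the other settings are assumptions for the example.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

rng = np.random.default_rng(0)

# Synthetic stand-in data: two clusters of (x, y, z) acceleration readings
touch = rng.normal(loc=[0.0, -9.5, 1.5], scale=0.3, size=(100, 3))
no_touch = rng.normal(loc=[9.5, 0.0, 0.5], scale=0.3, size=(100, 3))
X = np.vstack([touch, no_touch])
y = np.array([1] * 100 + [0] * 100)  # 1 = face touch, 0 = no face touch

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Fit the classifier on the training split only
clf = SVC(kernel="rbf", gamma="scale")
clf.fit(X_train, y_train)

# Score on rows the model has never seen
accuracy = clf.score(X_test, y_test)
print(f"held-out accuracy: {accuracy:.2f}")
```

Real wrist data is much messier than these two tidy clusters, so a high score here says nothing about how the model behaves on the device.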

Once I had my code classifying face touches, I created a simple circuit with an LED that would turn on whenever the classifier function detected a face touch. My idea was that this would be an unobtrusive way to notify a user that they are touching their face. However, my model was far too sensitive, so I had to go back and make a few modifications to my code.

Fine-tuning 1 (class imbalance):

First, I realized that the data I was passing in to sklearn was imbalanced, with face touches far overrepresented compared to how often they actually occur. So, I recorded even more data of times I was not touching my face and trained the model again. This improved it, but it was still too sensitive for my tastes.
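A quick way to spot this kind of imbalance is to count the labels before training. The sketch below does that on synthetic data with made-up class sizes. It also shows scikit-learn's `class_weight="balanced"` option, which is an alternative way to compensate and not what I did (I collected more data).

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(1)

# Synthetic stand-in data with far more touch rows than no-touch rows
touch = rng.normal(loc=[0.0, -9.5, 1.5], scale=0.5, size=(250, 3))
no_touch = rng.normal(loc=[9.5, 0.0, 0.5], scale=0.5, size=(60, 3))
X = np.vstack([touch, no_touch])
y = np.array([1] * len(touch) + [0] * len(no_touch))

# Count how many rows each class has
counts = np.bincount(y)
print(f"no-touch samples: {counts[0]}, touch samples: {counts[1]}")

# Alternative to collecting more data: weight each class inversely
# to its frequency so the rarer class is not drowned out
clf = SVC(kernel="rbf", gamma="scale", class_weight="balanced")
clf.fit(X, y)
```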

Fine-tuning 2 (manual code):

My biggest fix was restricting the classifier function so that it only runs when the accelerometer is in one of three specific orientations. When you touch your face, your wrist is almost always tilted down, facing your body. Because the accelerometer is wrist-mounted, I was able to use this to my advantage and only run the classifier when that initial condition, your wrist being tilted down towards your body, is met (the orientation function from the MMA8451 library was a huge help). After doing this, the wrist-mounted breadboard was doing a great job of recognizing face touching!
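The gating logic itself is simple. Here it is sketched in Python for readability, although the real version runs in the Arduino code. The three orientation names in `GATE_ORIENTATIONS` and the `classify` callback are hypothetical placeholders, not the exact ones from my build.

```python
# Hypothetical set of orientations treated as "wrist tilted toward the body"
GATE_ORIENTATIONS = {
    "Portrait Up Front",
    "Portrait Up Back",
    "Landscape Left Front",
}


def detect_touch(x, y, z, orientation, classify):
    """Run the classifier only when the wrist orientation passes the gate."""
    if orientation not in GATE_ORIENTATIONS:
        return False  # skip the model entirely, so no false alarm is possible
    return bool(classify(x, y, z))


def always_touch(x, y, z):
    """Stand-in for an over-sensitive model that flags every reading."""
    return True


print(detect_touch(0.1, -9.5, 1.2, "Portrait Up Front", always_touch))
print(detect_touch(0.1, -9.5, 1.2, "Landscape Right Back", always_touch))
```

Even with a model that fires on everything, the gate suppresses readings taken in any other orientation, which is why this fix cut down the false positives so much.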

Because this project was largely constrained by material availability during the pandemic, it is more of a proof of concept than the finished product I would have liked. Future directions for the project include designing a casing for the electronics, scaling down the build by using a small power source such as watch batteries, and using a vibrating output device rather than an LED. Overall, though, I am proud of the finished project and hopeful that a similar device might one day aid public health goals!

Finished product: